Elements of Machine Learning II: Automated classifier optimisation

One of the core features of the Python sklearn machine learning framework is its standard ‘estimator’ and ‘model’ interfaces for working with classification algorithms, or ‘classifiers’ as they are typically termed. Examples of sklearn classifiers include Support Vector Machines, Logistic Regression and Decision Trees. These can be treated as essentially interchangeable within a classification pipeline that might additionally include a ‘transformer’ interface for data modification. Without any automated optimisation, finding the best-performing classifier for a particular dataset and context can involve a lot of trial and error.
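To make the interchangeability concrete, here is a minimal sketch of the trial-and-error loop described above. It assumes a recent scikit-learn (0.18 or later, where `train_test_split` lives in `sklearn.model_selection`) and uses synthetic data from `make_classification` as a stand-in for a real dataset; the classifier settings are illustrative defaults, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (an assumption; any X, y arrays would do)
X, y = make_classification(n_samples=400, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# Three different classifiers that all expose the same fit/score interface
candidates = {
    "svm": SVC(),
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
}

scores = {}
for name, clf in candidates.items():
    clf.fit(X_train, y_train)                  # same call for every classifier
    scores[name] = clf.score(X_test, y_test)   # accuracy on the held-out split
    print("%-20s %.3f" % (name, scores[name]))
```

Because every classifier is driven through the same two calls, swapping one for another is a one-line change. Choosing between them, and tuning each one, is the manual part.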

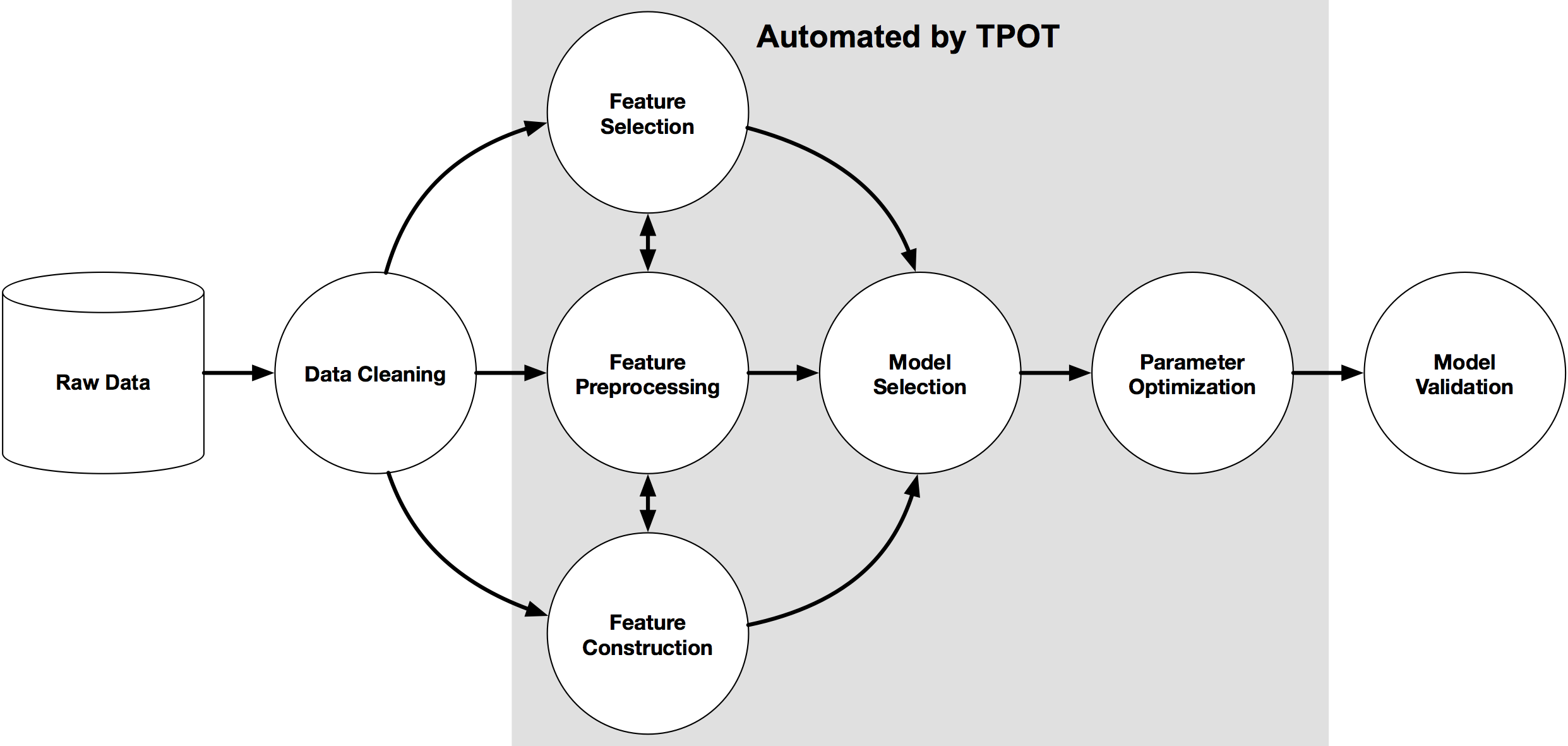

Enter TPOT, which is billed as “a python tool for automating data science”. It’s a powerful utility built on top of Python sklearn functionality that handles the grunt work of determining the optimal pipeline for producing the best results from a dataset split into training and test data. You can think of it as a smarter, assisted version of GridSearchCV:

TPOT will automate the most tedious part of machine learning by intelligently exploring thousands of possible pipelines to find the best one for your data.
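For comparison, this is roughly what the manual GridSearchCV route looks like. It is a sketch under assumptions: a recent scikit-learn (0.18 or later), synthetic stand-in data, and a hand-picked grid of `C` values chosen purely for illustration. The point is that the classifier and the grid both have to be chosen by hand.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (an assumption, not the dataset used in this post)
X, y = make_classification(n_samples=400, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# The search space is fixed up front: one classifier, one hyperparameter
param_grid = {"C": [0.01, 0.1, 1.0, 10.0, 100.0]}
search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid, cv=5)
search.fit(X_train, y_train)   # cross-validates every value in the grid

test_score = search.score(X_test, y_test)
print(search.best_params_)
print(test_score)
```

GridSearchCV only explores the grid it is given, for the single estimator it is given.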

TPOT has a dependency on the xgboost library, which is fairly tricky to build on Windows, so if you’re going to try this out it is best to do so in a Linux environment. There, all you need as groundwork on top of a standard Python sklearn setup is: pip install tpot. You are then good to run this script, slightly adapted from the one presented in the source article:

```python
# NOTE: written against the sklearn and TPOT releases current at the time.
# sklearn.cross_validation was removed in scikit-learn 0.20 (train_test_split
# now lives in sklearn.model_selection), and later TPOT releases expose
# TPOTClassifier rather than TPOT.
import pandas as pd
from sklearn.cross_validation import train_test_split
from tpot import TPOT
import time

output = 'exported_pipeline.py'
t0 = time.time()

fname = 'https://raw.githubusercontent.com/rhiever/Data-Analysis-and-Machine-Learning-Projects/master/tpot-demo/Hill_Valley_with_noise.csv.gz'
print("1. Reading '%s'" % fname)
data = pd.read_csv(fname, sep='\t', compression='gzip')

print("2. Generating X,y and training + test data")
X = data.drop('class', axis=1).values
y = data.loc[:, 'class'].values
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.75, test_size=0.25)

print("3. teapot brewing - will take a few mins")
teapot = TPOT(generations=10)
teapot.fit(X_train, y_train)

print("4. Score and export pipeline")
print(teapot.score(X_test, y_test))
teapot.export(output)

t1 = time.time()
print("Finished in %d seconds! Optimal pipeline in '%s'" % (t1-t0, output))
```

After some 20 minutes or so in an Ubuntu VM, this should result in the following output:

```
1. Reading 'https://raw.githubusercontent.com/rhiever/Data-Analysis-and-Machine-Learning-Projects/master/tpot-demo/Hill_Valley_with_noise.csv.gz'
2. Generating X,y and training + test data
3. teapot brewing - will take a few mins
4. Score and export pipeline
0.960592769803
Finished in 1344 seconds! Optimal pipeline in 'exported_pipeline.py'
```

where the generated exported_pipeline.py reveals the optimal supervised learning classifier to be a logistic regression with regularisation parameter C = 299.54. Here’s a cleaned-up version for direct comparison, which takes a few seconds to run:

```python
import numpy as np
import pandas as pd
from sklearn.cross_validation import train_test_split
from sklearn.linear_model import LogisticRegression

fname = 'Hill_Valley_with_noise.csv.gz'

# NOTE: Make sure that the class is labeled 'class' in the data file
data = pd.read_csv(fname, sep='\t', compression='gzip')
X = data.drop('class', axis=1).values
y = data.loc[:, 'class'].values
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.75, test_size=0.25)

# Perform classification with a logistic regression classifier
lrc1 = LogisticRegression(C=299.545454545)
lrc1.fit(X_train, y_train)
print(lrc1.score(X_test, y_test))
```

What TPOT does is automate a large chunk of the process of machine learning classifier pipeline development. Instead of using intuition and know-how to explore the search space, you hand the exploration over to a compute-heavy automated search that evolves candidate pipelines over a number of generations. If you’re someone who uses sklearn as your go-to machine learning prototyping tool, you probably want to get familiar with tpot, as it looks to be an essential tool.
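To give a feel for what searching over pipelines means, here is a toy sketch. It is not how TPOT works internally: it simply enumerates a tiny, hand-written space of (preprocessor, classifier) combinations and scores each with cross-validation. The candidate names, the synthetic data and the small search space are all assumptions made for illustration, and a recent scikit-learn is assumed.

```python
from itertools import product

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (an assumption)
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

# Hypothetical, deliberately tiny search space: factories for each building block
preprocessors = {"none": None, "scale": StandardScaler}
classifiers = {
    "logreg_C1": lambda: LogisticRegression(C=1.0, max_iter=1000),
    "logreg_C100": lambda: LogisticRegression(C=100.0, max_iter=1000),
    "tree": lambda: DecisionTreeClassifier(random_state=0),
}


def build(prep_name, clf_name):
    """Assemble a Pipeline from an optional transformer and a classifier."""
    steps = []
    if preprocessors[prep_name] is not None:
        steps.append(("prep", preprocessors[prep_name]()))
    steps.append(("clf", classifiers[clf_name]()))
    return Pipeline(steps)


# Score every combination with 3-fold cross-validation and keep the best
results = {}
for prep_name, clf_name in product(preprocessors, classifiers):
    pipe = build(prep_name, clf_name)
    results[(prep_name, clf_name)] = cross_val_score(pipe, X, y, cv=3).mean()

best = max(results, key=results.get)
print(best, results[best])
```

Exhaustive enumeration like this stops being practical as soon as the space grows beyond a handful of options, which is the gap an automated search such as TPOT is aimed at.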

The Uber for X Landscape

This Guardian long read on how Uber went from a single hire in Feb 2012 to total dominance of the London taxi transportation network is a textbook lesson in the power of disruption. Four years ago, the London cab scene was effectively a complex closed shop with black cab drivers resting on the laurels of their three-year Knowledge acquisition and a few private firms like Addison Lee sewing up the rest of the market. The entry of Uber into the market appeared to be of little obvious concern, and most of the competition continued to follow a well-worn playbook, failing to understand, let alone compete with, what was “only an app”. That is why this isn’t really all that surprising:

“We were worried that Addison Lee [4,500 cars and revenues of £90m a year] would get smart, spend £1m – which isn’t a lot of money for them – and make a really nice, seamless app that copied Uber’s. But they never did.”

Today, Uber has transformed the taxi dynamic in London and pushed out to other UK cities. Its spectacular success has fuelled a global appetite to build parallel “Uber for X” propositions over the last few years. There are signs these ventures are now experiencing something of a backlash in large part because they often rely on what is arguably an unsustainable zero-hours, low-paid precariat workforce. Nowhere more so than Uber itself where the main service innovation in the last couple of years has been in building even lower cost variants, UberX and UberPool, which further exacerbate tensions between the company and its drivers. Other entrepreneurs have taken heed and looked at doing something very different that elevates employee welfare concerns:

As tensions build, a smaller wave of on-demand entrepreneurs is finding an opportunity inside an opportunity – to be the “anti-Uber for X”.

Nevertheless, on-demand services continue to be developed at a furious pace, with the latest trend being to interface with users through a conversational UI paradigm:

big companies like Facebook, Apple, and Microsoft are now eager to host our interactions with various services, and offer tools for developers to make those services available. Chatbots easily fit into their larger business models of advertising, e-commerce, online services, and device sales.

As explained in a recent post on this blog, such chatbots today frequently serve as an interface onto a largely human-powered backend, particularly if they are able to handle the servicing of multiple different verticals. Pure Artificial Intelligence-based chatbot propositions remain the holy grail but there are few outfits outside of the GAFA quartet that have the competence to do a comparable job to Google Now or Siri. One possible contender is Viv which has been built by the ex-Siri team. WashPo’s absorbing profile of Viv is essential reading for anyone interested in the space.

More Machine Learning

- Rescale offer TensorFlow models in the cloud on AWS instances with GPUs.

- Neural networks are “impressively good at compression”. This article helps explain why. It essentially outlines how neural nets operate as autoencoders.

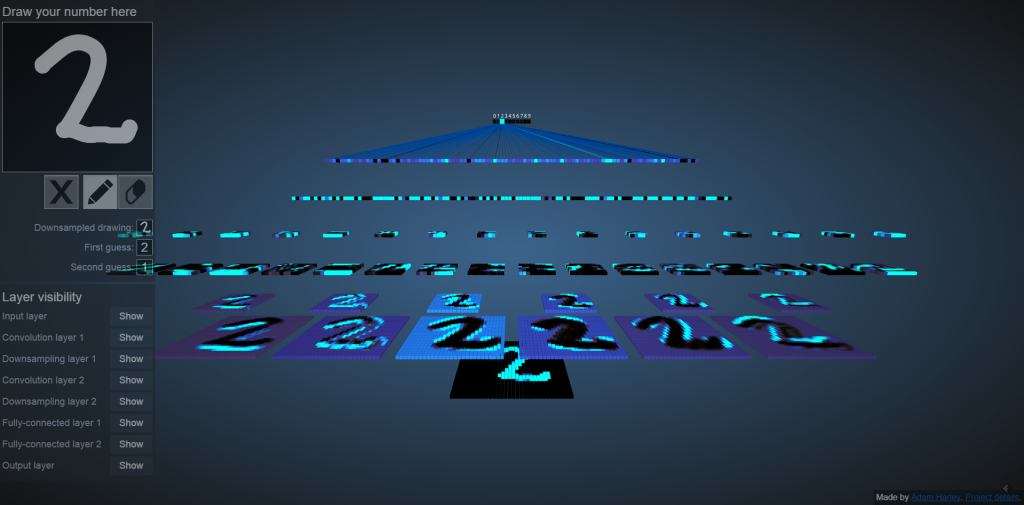

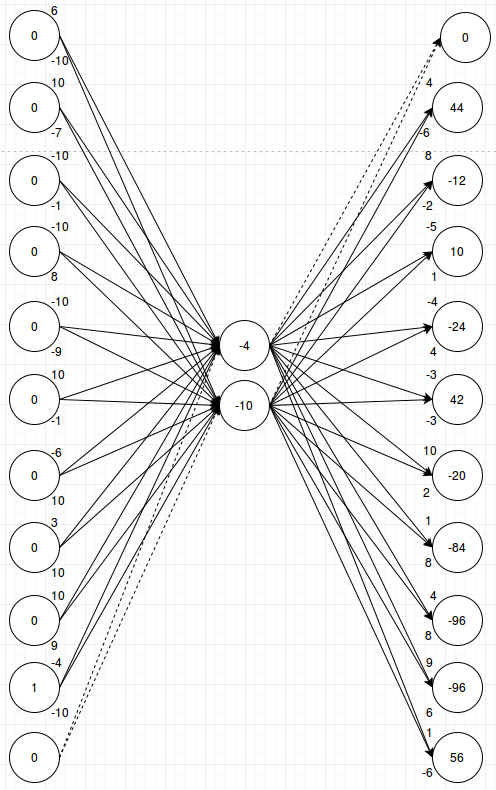

- Visualisation of the hidden layers of a neural network illustrates how handwriting recognition is handled by a couple of convolutional layers with compression followed by two fully connected layers.

- Algorithmic air traffic control:

“In recent year, the Gulf States have stopped using children as jockeys – and instead switched to robot ones after criticism from human rights organizations, who reported child were being killed on the camel racing-circuits.”

Wearables, Devices and Manufacturers

- This story of how Apple Music extends its long reach right into your laptop to delete local content and upload it to their cloud offers a cautionary tale for our times. The author suggests that in serving as the ultimate gateway for your content, cloud media services stand at the cusp of ushering in an Orwellian future in which they control the media users can access:

When I signed up for Apple Music, iTunes evaluated my massive collection of Mp3s and WAV files, scanned Apple’s database for what it considered matches, then removed the original files from my internal hard drive. REMOVED them. Deleted. If Apple Music saw a file it didn’t recognize—which came up often, since I’m a freelance composer and have many music files that I created myself—it would then download it to Apple’s database, delete it from my hard drive, and serve it back to me when I wanted to listen, just like it would with my other music files it had deleted.

- And this world is already coming to pass. In a post ironically entitled What Apple has to Fear from China, the New Yorker highlights the ever rising spectre of Western companies being closed out of involvement in the region’s internet economy which has the side effect of giving domestic firms a huge step up against foreign companies:

The pace at which China is building its walled-off world, filled with hardware and software created by Chinese companies, with only selectively allowed non-Chinese content, is accelerating.

- NYT on how Brooklyn is arguably the centre of gravity for a global fashion wearables scene built on innovative materials and 3D printing.

- Heritage audio OEM Bowers and Wilkins with 1000 employees has sold out to a tiny Silicon Valley startup in what looks to be a potentially interesting acquisition.

- The new £8 million Dyson Centre for Engineering Design at Cambridge University opened for business this week. Together with the £12 million Dyson School of Design Engineering at Imperial College and other generous bursaries, James Dyson is arguably the most important single influence in elite engineering in the UK. The man himself is on characteristically pugnacious form in this Telegraph article:

“It’s economic suicide that we’re not creating more engineers. They create the technologies that Britain can export, generating wealth for the nation. But we’re facing a chronic shortage of them.”

- UK smart educational toys startup Sam Labs has raised £3.2million in VC funding to “turn kids into inventors”.

Cloud and Digital Innovation

- It’s Disunited Nations as the UN finds itself in Enterprise Software bureaucracy hell. They could have built a company out of the money spent to deliver the Umoja collaboration (sic) suite.

- The Information on Mike Clayville, Amazon’s chief Enterprise AWS revenue wrangler.

- Salesforce’s strategy is to target “non-coding developers”. New platform chief Adam Seligman is playing to a different tune:

“We have 2.6 million developers registered so far. I want it to be 100 million. If we don’t look like other platforms, it’s because I think we’re playing a different game,”

- How to troubleshoot troublemakers comes with this amusing cut-out-and-keep guide to recognising the major types in a company near you:

  - The Hermit: Regardless of the team’s established process, workflow or deadlines, he finds ways to hide his work until he deems it suitable to share.

  - The Nostalgia Junkie: “Too bad you weren’t here a few years ago. It was great.”

  - The Trend Chaser: “I’ve read about this on Twitter and I’m installing it”

  - The Smartest in the Room: this troublemaker feeds off her environment and the reactions of others

- McKinsey on the need to lead in data and analytics and why it’s mostly about senior management buy in and organisational architecture:

some of the biggest qualitative differences between high- and low-performing companies, according to respondents, relate to the leadership and organization of analytics activities. High-performer executives most often rank senior-management involvement as the factor that has contributed the most to their analytics success; the low-performer executives say their biggest challenge is designing the right organizational structure to support analytics

- McKinsey again, on an operating model for company-wide agile development, suggesting that it too is mostly down to organisational structure. Mindset is surely critical as well, though it is not emphasised in this material:

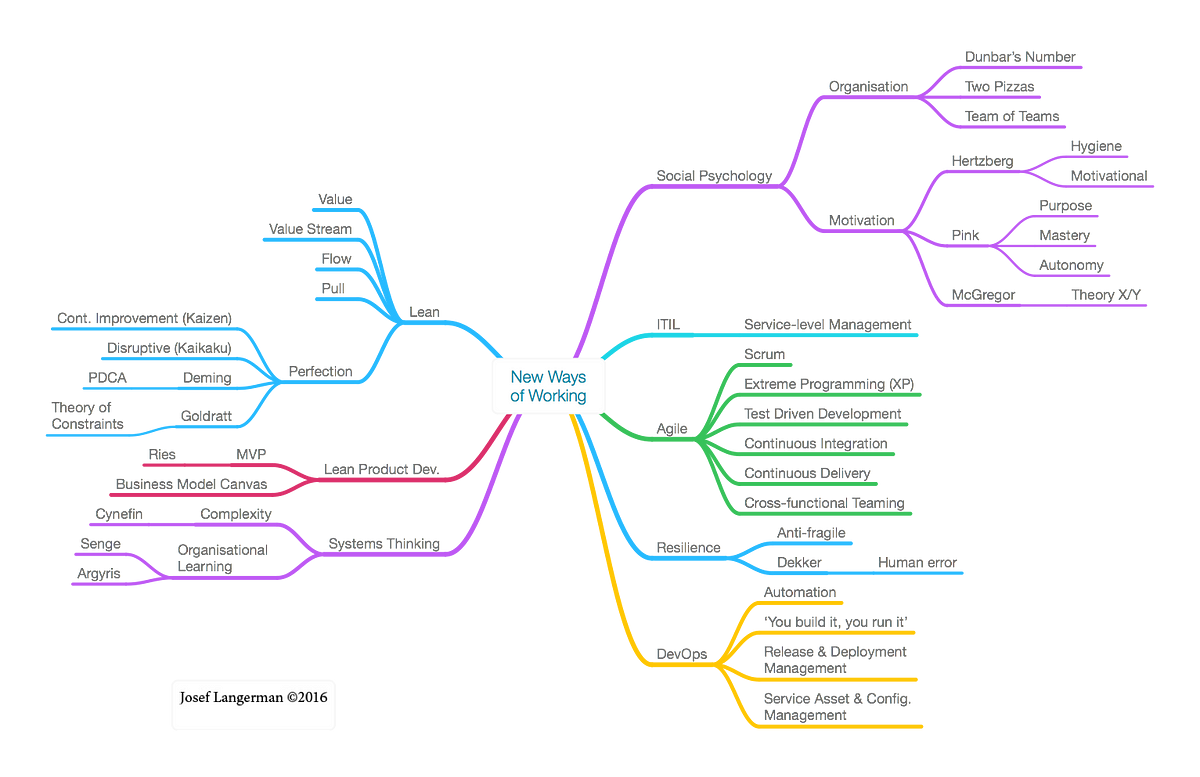

- This Medium post on Agile, IT and devops suggests the new mindset and way of working is beginning to percolate downwards perhaps as a result of dawning awareness of the existential dangers of ignoring this mind map of the new territory:

- Quartz on those existential perils and the signs that reveal whether your company is relying on old remedies for new corporate challenges or really trying to get out of jail. One of the solutions outlined is the use of corporate VC to bring in new thinking:

most companies today will not live beyond their fiftieth birthday. John Chambers, ex-CEO of Cisco, believes that 40% of today’s leading companies will not survive another decade. … As it becomes increasingly challenging to envision the future, conventional diagnoses and remedies that have led to past achievements just don’t apply any more. Previous success can lead to “a collective cognitive myopia,” explains London Business School’s Gary Dushnitsky. “It’s hard to see beyond something that you’ve excelled at for the last 15 or 20 years.”

Software Engineering

- Things I wish someone had told me when I was learning how to code include the fact that perseverance is all:

I’ve found that a big difference between new coders and experienced coders is faith: faith that things are going wrong for a logical and discoverable reason, faith that problems are fixable, faith that there is a way to accomplish the goal. The path from “not working” to “working” might not be obvious, but with patience you can usually find it.

- This O’Reilly course on Python beyond the Basics looks good.

- KnightOS exists because that TI-30 calculator you know you still have in a drawer somewhere could be running something else:

KnightOS is a free and open source firmware for calculators, namely Texas Instruments calculators. It’s written in assembly, and projects that support it are written in C, Python, JavaScript, LaTeX, etc. It is the first mature, open source operating system for calculators and it takes that seriously.

- How to use Maths words to sound smart. I’ve actually used this before on several occasions and just ended up confusing people. YMMV.

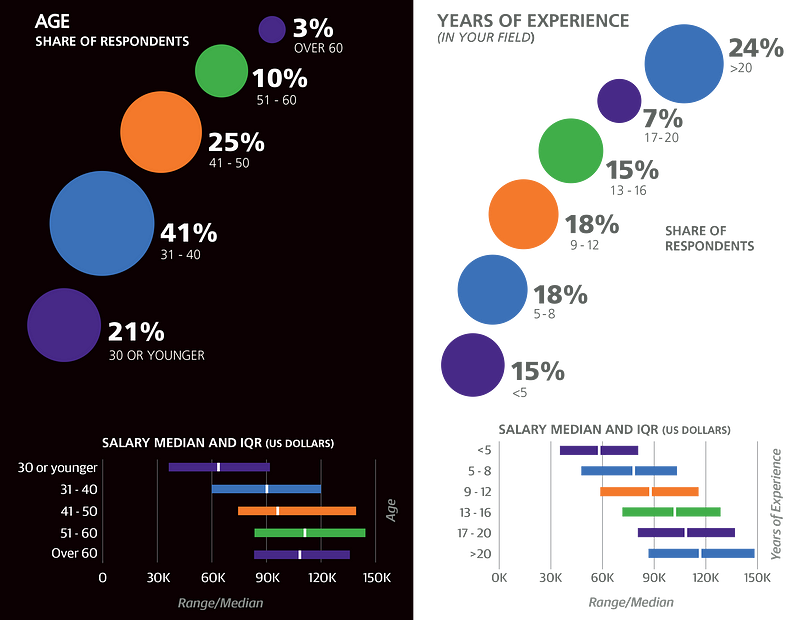

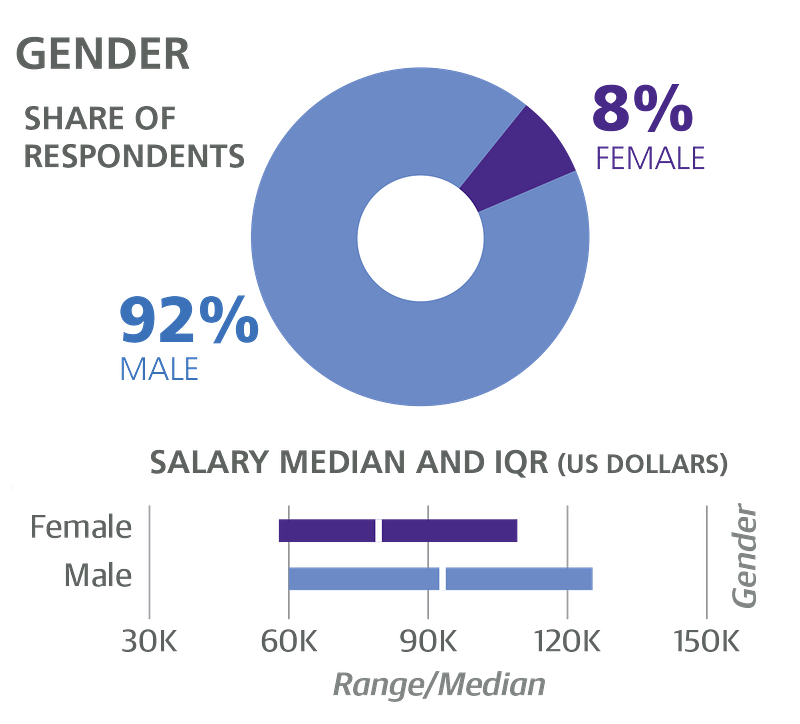

- 5000 developers talk about their salary. Some fascinating stats in this O’Reilly US survey, including the revelation that employers do on the whole value age and experience:

- The survey includes some depressing stats outlining the representation and remuneration challenges women working in software development still face. And as if that wasn’t bad enough, there is a shocking degree of normalisation around the totally unacceptable threats and intimidation that women routinely face. No-one should ever have to endure that experience just for doing their job. It seems that the whole industry is in something approaching collective denial over the extent of the problem.

Society and Culture

- Good post on TheConversation outlining how computing technology aimed at making the lives of Western consumers easier and more productive is also increasingly being used to support global terrorism and crime:

Having researched cybercrime and technology use among criminal populations for more than a decade, I have seen firsthand that throwaway phones are just one piece of the ever-widening technological arsenal of extremists and terror groups of all kinds. Computers, smartphones and tablets also draw people into a movement, indoctrinate them and coordinate various parts of an attack, making technology a fundamental component of modern terrorism.

- The Atlantic on “why luck matters more than you might think” in explaining success. The reason it’s important to acknowledge the distinction in one’s personal life is that the pernicious effect of hindsight bias tends to make us mistake luck for skill:

a growing body of evidence suggests that seeing ourselves as self-made—rather than as talented, hardworking, and lucky—leads us to be less generous and public-spirited. It may even make the lucky less likely to support the conditions (such as high-quality public infrastructure and education) that made their own success possible.

- The incomparable Douglas Adams gave a lecture at Cambridge back in 1998 on the concept of an “artificial god”:

I suspect that as we move further and further into the field of digital or artificial life we will find more and more unexpected properties begin to emerge out of what we see happening and that this is a precise parallel to the entities we create around ourselves to inform and shape our lives and enable us to work and live together.

Leicester City

- Leicester City’s incredible triumph in the English Premiership has already been labelled by some as “the greatest sporting story of all time”. It has certainly brought much-needed optimism and romance to a sport that had lost some of its lustre over the years, with the influx of money seemingly determining who won. Their coronation late on Monday May 2nd came when Chelsea scored two goals in an intense and violent second half against Spurs that made for dramatic viewing:

I can't breathe.

— Gary Lineker (@GaryLineker) May 2, 2016

Football. Bloody hell.

— Leicester Mercury (@Leicester_Merc) May 2, 2016

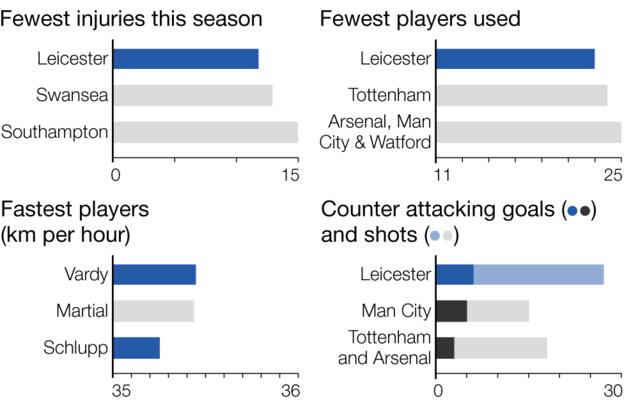

- A season doesn’t rest on one game though. In an attempt to rationalise how this ‘impossible’ 5000-1 modern miracle happened, various reasons have been supplied. They range from team spirit and manager Claudio Ranieri’s universally praised management approach to the application of scientific techniques to football particularly in respect of recovery. With a nod to the last section in the blog, however, part of it was down to sheer luck with injuries and fixtures. And counterattacking:

- The Guardian provided this fascinating inside story on the campaign and even the Economist saw fit to wade in with their explanation.

- On a personal level, I was born and grew up in Leicester on a diet that included Roy of the Rovers and the improbable exploits of Roy Race which had nothing on this story.

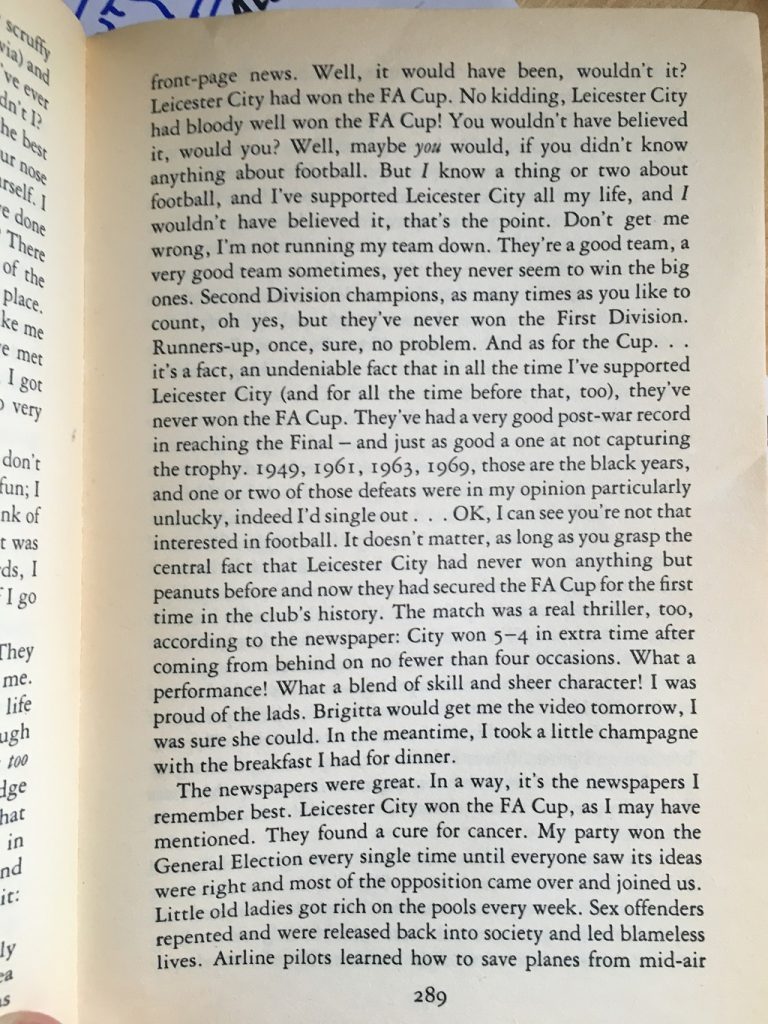

- Years later, lifelong fan Julian Barnes wrote about Leicester City winning the FA Cup in A History of the World in 10½ Chapters. In a chapter called The Dream, about an imagined future, their win was positioned in the paper next to news of a cure for cancer. It helps situate how utterly inconceivable it is that Leicester City have won the Premier League, and the “generalised delirium” of the week-long party that’s been going on in a small East Midlands town. Nessun Dorma indeed:

- The last word should go to the maestro himself:

Trump

- Donald Trump has emerged from the Republican nomination campaign as last man standing and already triggered a mini-flight from the GOP by those who can’t or won’t support his candidacy. One suspects it will make little difference to the man or his prospects. NYT has already taken the liberty of imagining his first 100 days as US President. It’s not going to be BAU one suspects:

On his first day in office, he said, he would meet with Homeland Security officials, generals, and others — he did not mention diplomats — to take steps to seal the southern border and assign more security agents along it. He would also call the heads of companies like Pfizer, the Carrier Corporation, Ford and Nabisco and warn them that their products face 35 percent tariffs because they are moving jobs out of the country.

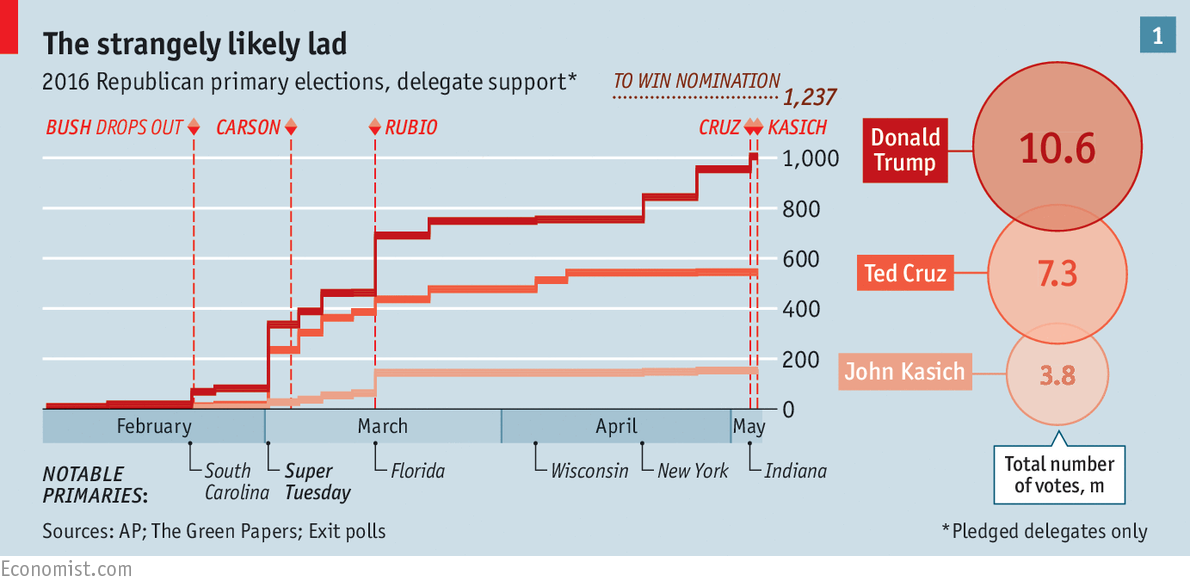

- Many respected commentators are deeply concerned at the developments. The Economist charted Trump’s grimly inevitable upward progress towards putative nomination, suggesting it represented a triumph of fear over hope and was “terrible news for Republicans, America and the world”.

If Mr Trump’s diagnosis of what ails America is bad, his prescriptions for fixing it are catastrophic. His signature promise is to wall off Mexico and make it pay for the bricks. Even ignoring the fact that America is seeing a net outflow of Mexicans across its southern border this is nonsense. Mexico has already refused to pay.

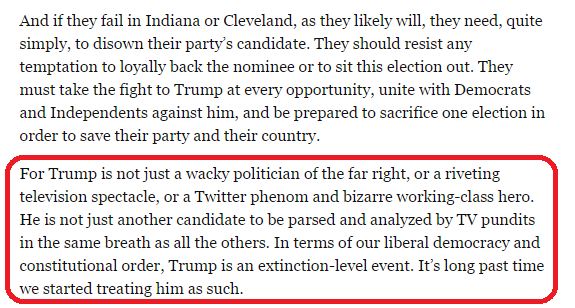

- NY Mag went further in a powerful article referencing fascism, tyranny, Plato and political elites. The author is bleak in his ultimate assessment of Trump – he represents an “extinction level event” for liberal democracy. Trouble is that the alternative isn’t that great either.

- Witness the disturbing story of Illma Gore and what it says about America:

For Gore, the recent violent attack by Trump supporters, which left her with severe facial bruising, is indicative of the election — and how the Republican is inciting violence while exposing deep divides in American society.